Demystifying DeepSeek: What DeepSeek means for AI on Mac

In case you haven't heard, DeepSeek has made a serious mark on the AI industry over the past week. Over the weekend, their iOS app became the #1 free app on the App Store, overtaking incumbents like ChatGPT and Google Gemini.

Not only has DeepSeek gone viral with consumers, but business leaders are also blaming its release for a steep drop in Nvidia’s stock price, as it has called many existing assumptions about AI model training into question. Built on a base model that DeepSeek says cost just $5.5 million to train, and released as free-to-use open-source software, DeepSeek’s R1 model has changed the AI landscape and represents a landmark for open-source large language models.

Today, we’re going to take a first look at DeepSeek’s models and their implications for AI on Apple hardware overall.

What, exactly, is DeepSeek?

DeepSeek is an AI research lab founded in China as a subsidiary of High-Flyer, a Chinese hedge fund. They specialize in developing general AI models, all of which have been made open source so far. Their two latest LLMs, V3 and R1, have surged in popularity, with the DeepSeek app for iOS becoming the most downloaded app on the App Store within a week of R1’s release.

DeepSeek Models

V3

V3 is DeepSeek’s mainstream base model. Like most large language models, it starts generating its response immediately, using the provided prompt as context. In terms of general output quality, it scores well, roughly on par with OpenAI’s GPT-4o and Anthropic’s Claude 3.5 Sonnet, with different strengths and weaknesses for specific applications. At 671 billion parameters (in a mixture-of-experts design, with about 37 billion active per token), it is substantially larger than competing open-source models such as Llama 3.1 405B, and it generally performs better than other open-source models.
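V3 (and R1, which is derived from it) uses a mixture-of-experts (MoE) architecture: a small router picks a few “expert” sub-networks for each token, so only a fraction of the weights does work at any one step. The toy sketch below illustrates the idea, and why the set of experts in use keeps changing from token to token. It is a simplification under stated assumptions: 16 experts, 2 per token, and a plain softmax over the top-k router scores. DeepSeek V3’s real router uses far more experts and its own gating function.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits, k):
    """Pick the k highest-scoring experts for one token and normalise their weights.

    Toy top-k gating for illustration only; not DeepSeek's actual router.
    """
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def experts_touched(all_token_logits, k):
    """Union of the experts selected across a sequence of tokens."""
    used = set()
    for logits in all_token_logits:
        used.update(idx for idx, _ in route_token(logits, k))
    return used


if __name__ == "__main__":
    random.seed(0)
    num_experts, k = 16, 2  # hypothetical sizes, far smaller than V3's
    # Random scores stand in for the router's output on 50 tokens.
    tokens = [[random.gauss(0, 1) for _ in range(num_experts)] for _ in range(50)]
    print("token 0 ->", route_token(tokens[0], k))
    print(f"{len(experts_touched(tokens, k))} of {num_experts} experts used across 50 tokens")
```

Even though each token touches only 2 experts here, a modest run of tokens ends up using most of them, which is why every expert has to stay loaded in memory.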

R1

Derived from DeepSeek V3, R1 is DeepSeek’s premier model and the current class leader among open-source models. Like OpenAI’s o1 series, R1 uses chain-of-thought reasoning to “think” through a problem before answering. Unlike o1, however, R1 exposes its chain of thought, so the user can read through the reasoning it generated. R1 is also open-source and freely licensed (under the MIT License), which lets any user or organization run or fine-tune R1 models on their own infrastructure without paying licensing fees. DeepSeek R1 is the same size as DeepSeek V3 (671B parameters), but it can take much longer to produce an answer, because it always generates “thinking” tokens before the response, regardless of the prompt.

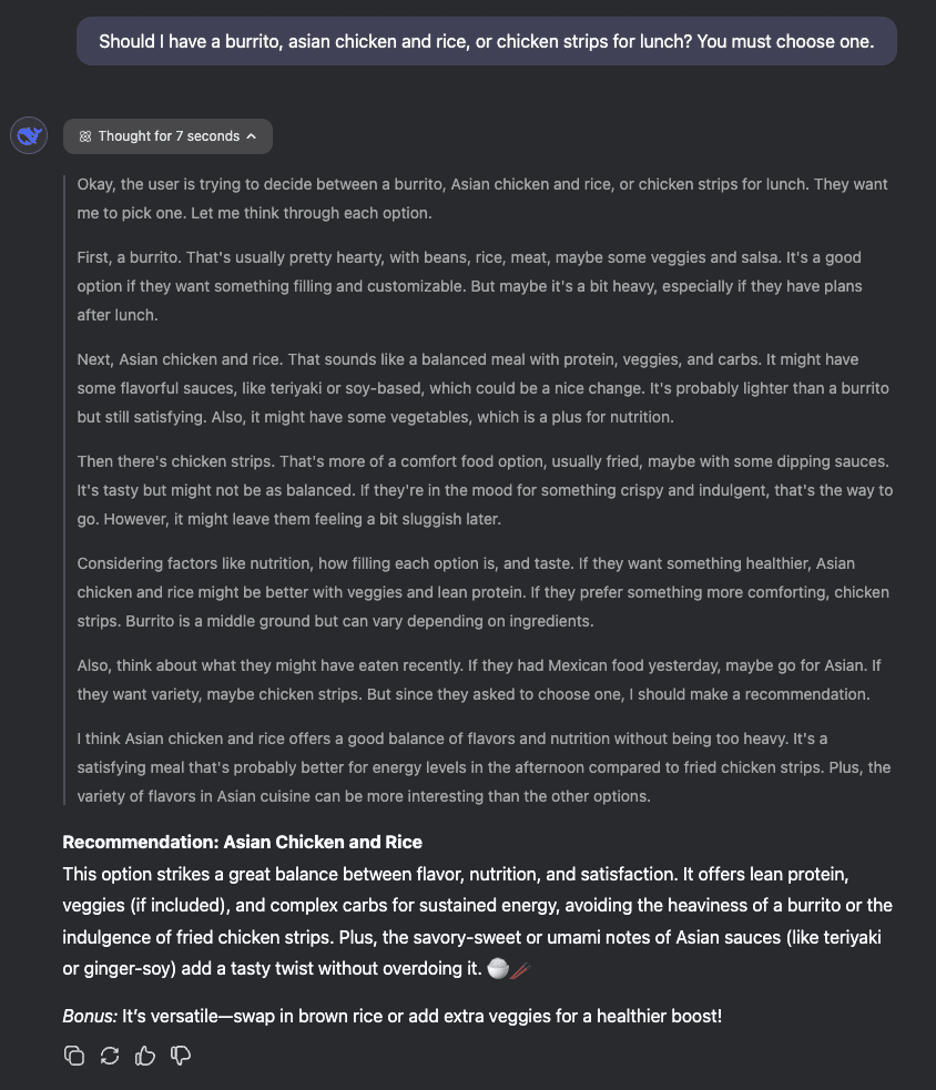

As an example, here is DeepSeek R1 deliberating about what I should eat for lunch:

R1 always “thinks” through its response before writing, even when it’s not necessary.
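R1 and its distilled variants wrap that reasoning in `<think>` … `</think>` tags ahead of the final answer. If you call a locally hosted model and only want to show the answer (or log the reasoning separately), a small helper can split the two. This is a minimal sketch assuming that tag format; some chat templates place the opening `<think>` in the prompt, so the completion may contain only the closing tag, and the helper tolerates that case.

```python
OPEN, CLOSE = "<think>", "</think>"


def split_reasoning(raw):
    """Split an R1-style completion into (reasoning, answer).

    Assumes reasoning ends at the first </think>; the opening <think> is optional.
    If no closing tag is present, the whole text is treated as the answer.
    """
    end = raw.find(CLOSE)
    if end == -1:
        return "", raw.strip()
    start = raw.find(OPEN)
    # Skip past <think> if it appears before </think>; otherwise reasoning starts at 0.
    reasoning_start = start + len(OPEN) if 0 <= start < end else 0
    reasoning = raw[reasoning_start:end].strip()
    answer = raw[end + len(CLOSE):].strip()
    return reasoning, answer
```

For instance, `split_reasoning("<think>Maybe soup?</think>Have a sandwich.")` returns `("Maybe soup?", "Have a sandwich.")`.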

R1 Distilled Models

Alongside R1, DeepSeek also released a series of distilled R1 models. These are smaller open-source models (from the Llama and Qwen families) fine-tuned on outputs generated by the full version of R1. As a result of this fine-tuning, they also use chain-of-thought reasoning to generate their responses, which improves the accuracy and quality of those responses compared to the original base models.
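In this sense, distillation is largely a data-generation step: the large “teacher” model answers a set of prompts, and its full outputs, reasoning included, become fine-tuning targets for a smaller “student.” The sketch below shows only that collection step. `teacher` stands in for any function that queries the large model, and `keep` is a hypothetical quality filter (for example, checking a math answer); neither reflects DeepSeek’s actual pipeline.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class SFTExample:
    prompt: str
    completion: str  # the teacher's full output, reasoning included


def build_distillation_set(
    prompts: Iterable[str],
    teacher: Callable[[str], str],
    keep: Callable[[str, str], bool] = lambda prompt, completion: True,
) -> List[SFTExample]:
    """Collect teacher completions to use as supervised fine-tuning targets for a student."""
    examples: List[SFTExample] = []
    for prompt in prompts:
        completion = teacher(prompt)
        # Keep only outputs with a finished reasoning trace that also pass the caller's filter.
        if "</think>" in completion and keep(prompt, completion):
            examples.append(SFTExample(prompt, completion))
    return examples
```

The student is then fine-tuned on these prompt/completion pairs with an ordinary next-token loss, which is how it picks up the habit of reasoning before answering.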

The distilled models are much easier to run and bring reasoning capabilities to lower-end hardware. However, it’s important to note that they are not simply smaller versions of the full DeepSeek R1, even though their outputs can be similar in nature (and improved over the base models). Additionally, as with R1, these distilled models always include chain-of-thought reasoning in their responses, whether or not the prompt calls for it. As a result, they will consistently take longer to respond than their base model counterparts (though for complex tasks, the improvement in response quality is worth it).
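A quick back-of-the-envelope calculation shows where the extra time comes from. Because a distilled model shares its base model’s architecture, it generates tokens at roughly the same speed; the difference is the number of tokens. The figures below (a 300-token answer, 40 tokens per second, 1,200 thinking tokens) are hypothetical, and the sketch ignores prompt-processing time.

```python
def decode_seconds(answer_tokens: int, tokens_per_second: float, thinking_tokens: int = 0) -> float:
    """Time to generate a response at a constant decode speed.

    Simplifying assumption: prompt processing and slowdowns on long outputs are ignored.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return (thinking_tokens + answer_tokens) / tokens_per_second


if __name__ == "__main__":
    # Hypothetical numbers for illustration only.
    base = decode_seconds(300, 40)
    distilled = decode_seconds(300, 40, thinking_tokens=1200)
    print(f"base model: {base:.1f}s, distilled with reasoning: {distilled:.1f}s")
```

With these numbers the same answer takes 7.5 seconds from the base model and 37.5 seconds once the reasoning is included.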

What Macs do I need to run these?

Currently, the full versions of R1 (and V3) cannot run on any single Mac model without extremely heavy quantization (fewer than 2 bits per weight), which dramatically reduces quality and is not recommended for production use. Even though only about 37 billion of the model’s 671 billion parameters are active for any given token, the model selects a different set of experts for each token, so to achieve usable performance the entire model must be held in memory. At standard quantization levels (4-bit through 16-bit), that requires roughly 350GB to 1.3TB of available RAM.

It does mean, however, that DeepSeek R1 can run on a cluster of Macs. We are testing this internally and will share more information once we’ve established best practices for running clustered models. We expect clustering to become more popular for running these larger models, as we believe the increase in quality is well worth the additional compute resources required.

In the meantime, the R1 Distilled models still provide excellent performance with reasoning capabilities. We generally suggest running models at 8-bit quantization for optimal output quality. Here’s a list of our minimum recommended standard models for the most popular Distilled variants:

- DeepSeek-R1-Distill-Llama-8B – M4.S

- DeepSeek-R1-Distill-Qwen-32B – S1.M

- DeepSeek-R1-Distill-Llama-70B – S2.L
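The memory figures above follow from simple arithmetic: weight storage is roughly parameters × bits per weight ÷ 8 bytes. The sketch below applies that rule to the full model and the distilled variants, and estimates how many machines a cluster would need. It counts weights only (the KV cache, activations and runtime overhead add more), and `usable_gb_per_mac` is a hypothetical per-machine budget you would set after leaving room for macOS.

```python
import math


def weights_gb(params_billion: float, bits: int) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes).

    Ignores the KV cache, activations and runtime overhead, so real usage is higher.
    """
    return params_billion * bits / 8


def macs_needed(params_billion: float, bits: int, usable_gb_per_mac: float) -> int:
    """Hypothetical helper: minimum machines whose combined usable memory holds the weights."""
    return math.ceil(weights_gb(params_billion, bits) / usable_gb_per_mac)


MODELS = {
    "DeepSeek R1 / V3 (full, MoE)": 671,
    "DeepSeek-R1-Distill-Llama-70B": 70,
    "DeepSeek-R1-Distill-Qwen-32B": 32,
    "DeepSeek-R1-Distill-Llama-8B": 8,
}

if __name__ == "__main__":
    for name, params in MODELS.items():
        sizes = ", ".join(f"{bits}-bit ~{weights_gb(params, bits):,.0f} GB" for bits in (4, 8, 16))
        print(f"{name}: {sizes}")
    # For the MoE model, memory scales with all 671B parameters, not the ~37B active per token.
    print("Weights active per token at 8-bit:", weights_gb(37, 8), "GB")
    # Assumption: ~150 GB of each Mac's unified memory is usable for model weights.
    print("Macs needed for full R1 at 4-bit:", macs_needed(671, 4, 150))
```

At 4-bit the full model needs about 335GB for weights alone and about 1.3TB at 16-bit, matching the range quoted above, while the 8-bit distilled models need roughly 8GB, 32GB and 70GB.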

These distilled models can run today on any Apple silicon Mac with enough RAM, using popular frameworks like llama.cpp, Ollama or LM Studio. As we’ve written before in our M4 benchmarks, Mac Studio models are particularly well suited to running LLMs, as their chips offer substantially more GPU capacity than the base and Pro chips used in the Mac mini.
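As one example of what “running locally” looks like in practice, Ollama serves a REST API on `localhost:11434`, and its `/api/generate` endpoint accepts a model name and prompt. The sketch below uses only Python’s standard library. It assumes Ollama is installed and running and that a distilled model has been pulled; the `deepseek-r1:8b` tag is an assumption, so check Ollama’s model library for which distilled checkpoint each tag currently maps to.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request for a locally running Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 600) -> str:
    """Send the prompt and return the full completion text (reasoning included)."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

With the server running (for example after `ollama pull deepseek-r1:8b`), `generate("deepseek-r1:8b", "What should I eat for lunch?")` returns the model’s full output, and the request never leaves the machine.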

Is my data safe when running DeepSeek locally?

When running DeepSeek on dedicated private infrastructure, you have complete control over your data. Much has been said about data privacy concerns around DeepSeek, but these pertain to DeepSeek’s own (China-based) API service and apps, and do not apply to their open-source models.

All of the benefits of having a Private AI server apply equally to DeepSeek models and to any other open-source models. At small scale, the Mac remains the best platform to run large models with complete data sovereignty, as it allows you to easily and affordably control the infrastructure running the models.

Get AI-ready cloud-hosted Macs with MacStadium

Want to get started with your own Mac cluster? MacStadium can help. Check out our full list of available Macs and get access within seconds.